Principal component analysis¶

If we have many features, odds are that many are correlated. If there are strong relationships between features, we might not need all of them.

With principal component analysis we want to extract the most relevant/independant combination of features.

It is important to realise that PCA only looks at the features without looking at the labels, it is an example of unsupervised learning.

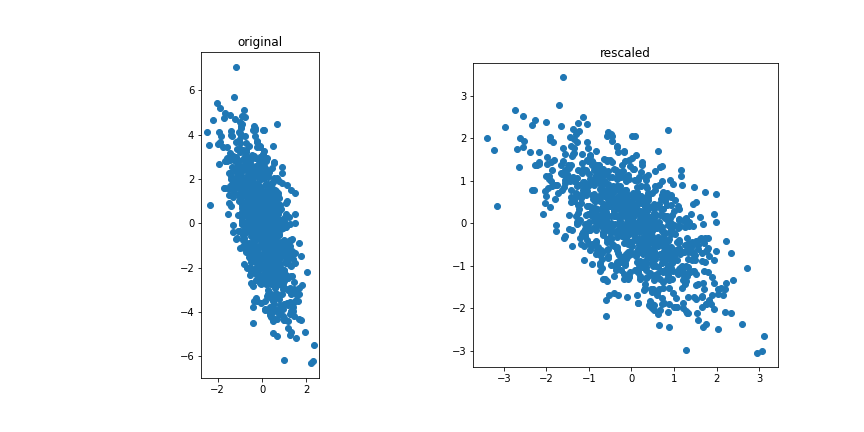

Correlated vs uncorrelated¶

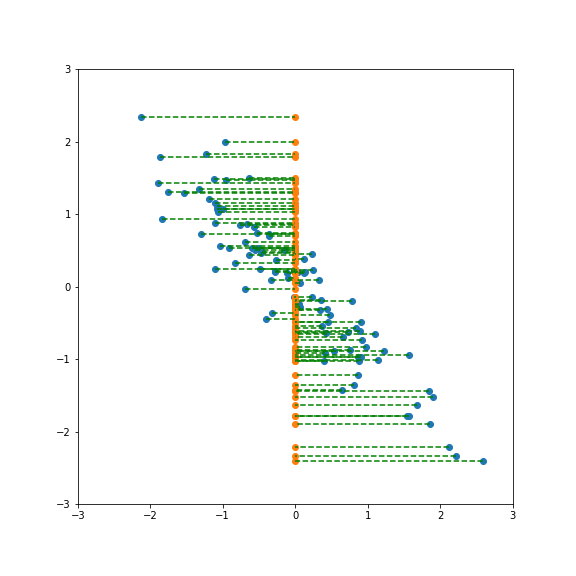

Correlated features

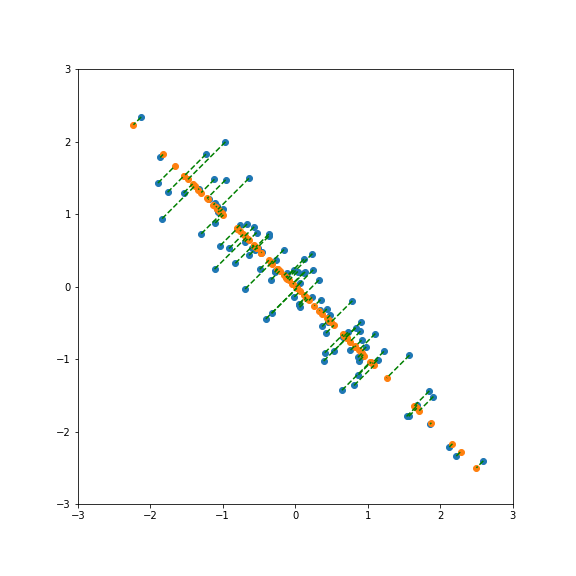

Uncorrelated features:

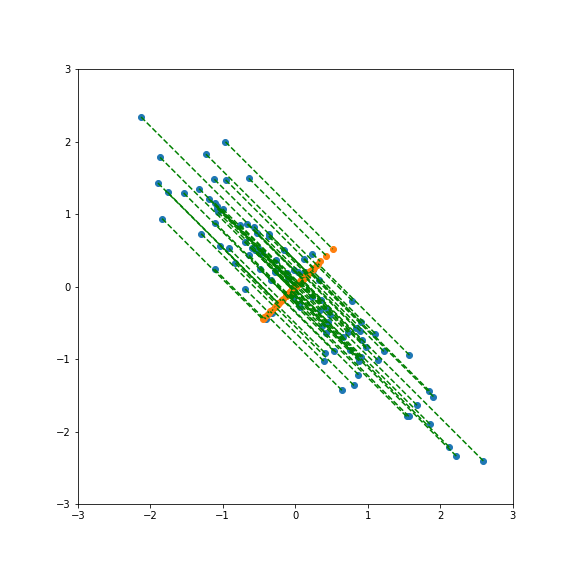

The idea for PCA is to project the data on a subspace with fewer dimensions.

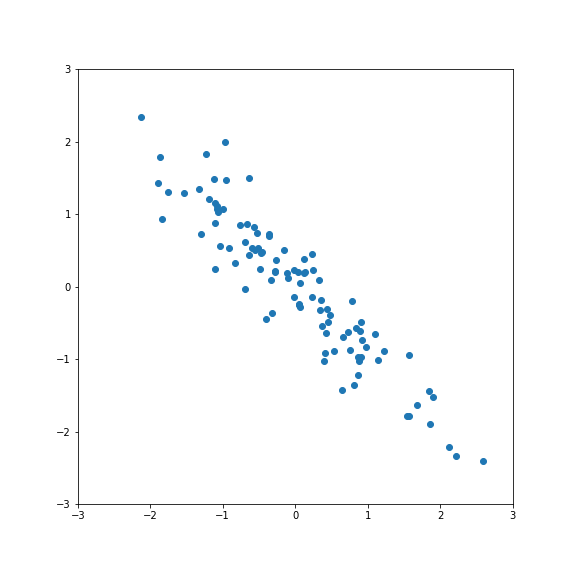

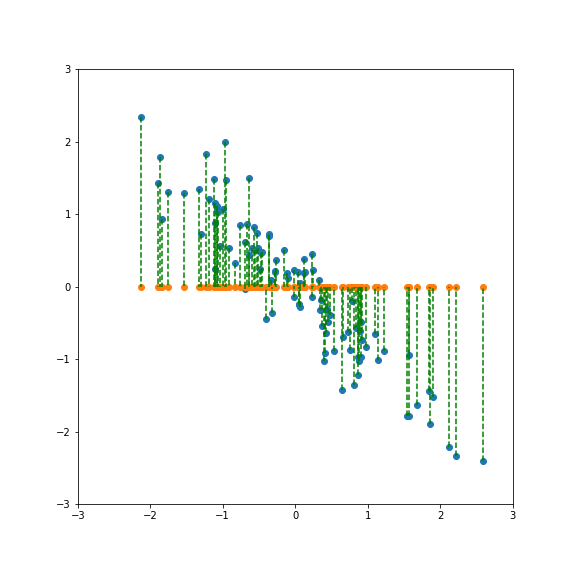

The data is standardised.

If we project onto the first component we get variance 1:

If we project onto the second component we also get variance 1:

But projecting onto a different direction gives a different variance, here larger than 1:

And here smaller than one:

Performing PCA gives a new basis in feature space that include the direction of largest and smallest variance.

There is no guarantee that the most relevant features for a given classification tasks are going to have the largest variance.

If there is a strong linear relationship between features it will correspond to a component with a small variance, so dropping it will not lead to a large loss of variance but will reduce the dimensionality of the model.

Finding the principal components¶

The first step is to normalise and center the features.

$$ x_i \rightarrow a x_i +b $$such that

$$ \langle x_i\rangle = 0 \;,\qquad \langle x_i^2\rangle = 1$$The covariance matrix of the data is then given by

$$ \sigma = X^T X $$If $X$ is the $n_d\times n_f$ data matrix of the $n_d$ training samples with $n_f$ features. The covariance matrix is a $n_f\times n_f$ matrix.

If we diagonalize $\sigma$

$$\sigma = S D S^{-1}$$where the columns of $S$ are the eigenvectors of $\sigma$.

the eigenvectors with the highest eigenvalues correspond to the axis with the highest variance. PCA reduces the dimensionality of the data by projecting unto the subspace spanned by the eignevectors with the $k$ highest eigenvalues.

$$ X_k = X S_k$$where $S_k$ is the $n_f\times k$ matrix composed of the $k$ highest eigenvectors.

Singular value decomposition¶

There is a shortcut to computing the full data variance to calculate the principal component analysis. Using singular value decomposition, we can write $X$ as

$$X = U \Sigma V $$where $U$ is an orthogonal $n_d\times n_d$ matrix, $\Sigma$ is a $n_d\times n_f$ matrix with non-vanishing elements only on the diagonal and $V$ is a $n_f\times n_f$ orthogonal matrix.

using this decomposition we have

$$ X^T X = V^T \Sigma^T U^T U\Sigma V = V^{-1} \Sigma^T \Sigma V $$The combination $\Sigma^T \Sigma$ is diagonal so we see that the matrix V is the change of basis needed to diagonalise $X^T X$. So we only need to perform the SVD of X to find the eigenvectors of $X^T X$.

Data sample variance¶

The total variance of a data (zero-centered) sample is given by

$$\sigma_{tot} = \left\langle\sum_i X_i^2\right\rangle = \frac{1}{N-1}\sum_{sample}\sum_i x_i^2 = \frac{1}{N-1} {\rm Tr} (X^T X) \;.$$The trace is invariant under rotations in the feature space so it is equal to the trace of the diagonalised matrix ${\rm Tr}(\sigma)=\sum E_j$ where $E_j$ are the eigenvalues of $X^TX$.

If we have the SVD decomposition of $X$ we can express these eigenvalues in term of the diagonal elements $\epsilon_j$ of $\Sigma$:

$${\rm Tr}(\sigma)=\sum E_j = \sum_j \epsilon_j^2$$Explained variance¶

When we only consider the $k$ principal axis of a dataset we will loose some of the variance of the dataset.

Assuming the eigenvalues are ordered in size we have

$$\sigma_k\equiv {\rm{Tr}}(X_k^T X_k) = \sum\limits_{j=1}^k \epsilon_j^2$$$\sigma_k$ is the variance our reduced dataset retained from the original, it is often referred as the explained variance.

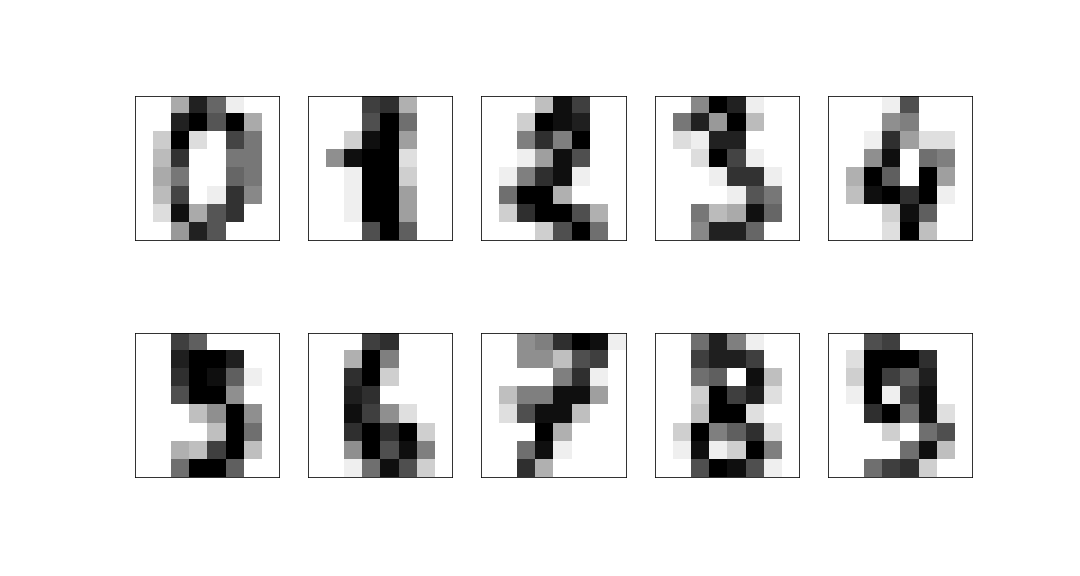

Example: 8x8 digits pictures¶

We consider a dataset of handwritten digits, compressed to an 8x8 image:

These have a 64-dimensional space but this is clearly far larger than the true dimension of the space:

- only a very limited subset of 8x8 pictures represent digits

- the corners are largely irrelevant hardly ever used

- digits are lines, so there is a large correlation between neighbouring pixels.

PCA should help us limit our features to things that are likely to be relevant.

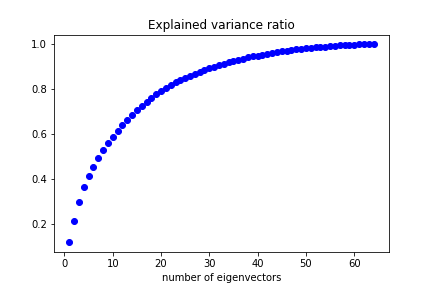

Performing PCA we can see how many eigenvectors are needed to reproduce a given fraction of the dataset variance:

We can keep 50% of the dataset variance with less than 10 features.

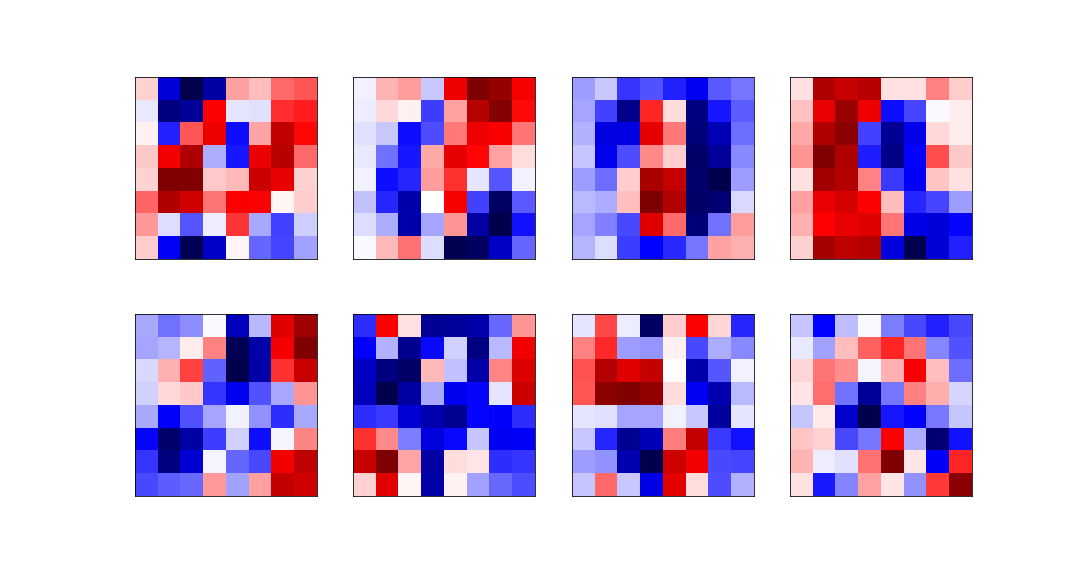

The eight most relevant eigenvectors are:

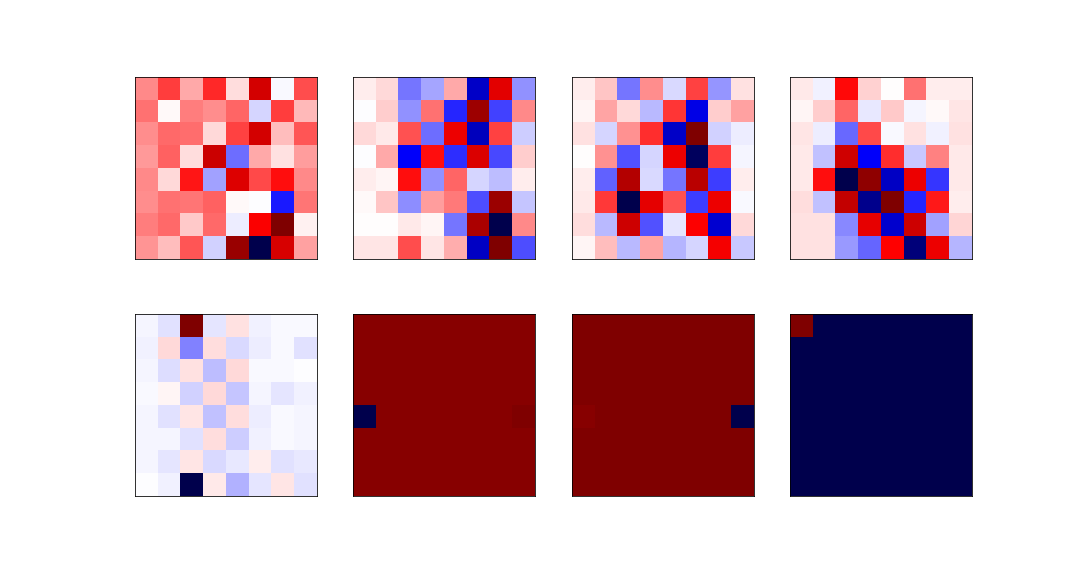

The least relevant eigenvectors are:

Data visualisation¶

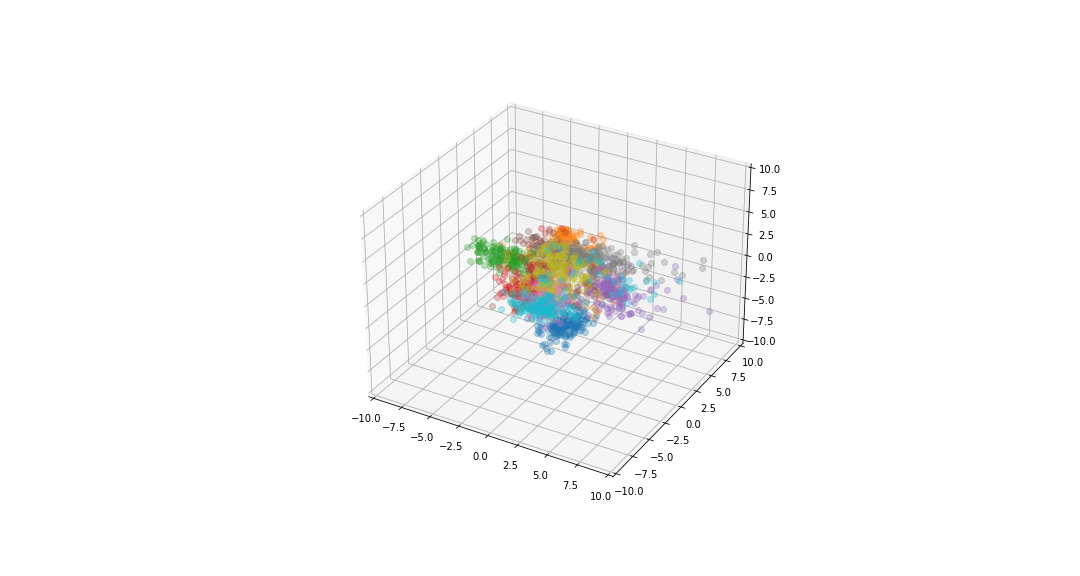

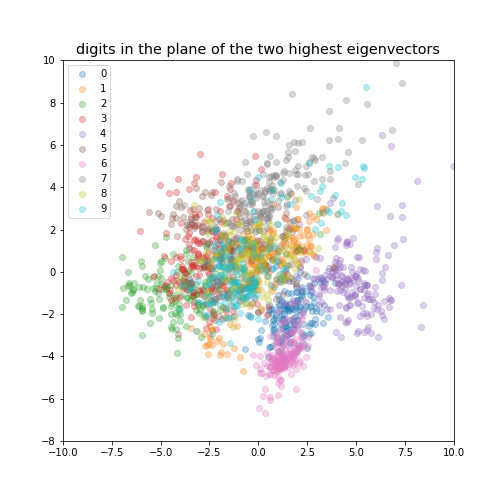

If we reduce the data to be 2-dimensional or 3-dimensional we can get a visualisation of the data.

digits in the plane of the two highest eigenvectors¶